AI RESEARCH BRIEF: The Transparency Dilemma – When AI Disclosure Reduces Trust

In short: people who disclose using AI are trusted less than those who stay silent.

A paper by Schilke and Reimann (May 2025) reports 13 preregistered experiments with more than 3,000 participants, across contexts from classrooms and hiring to investment and creative work. The studies consistently show that people who disclose using AI are trusted less than those who stay silent, even though disclosure is often seen as the ethical choice.

This follows my earlier brief on AI in dissertation writing: the core skill now isn’t hiding AI use, but learning how to use it legitimately, ethically, and visibly without losing trust. But when and how will transparency lose its stigma? When LLMs and AI become more trustworthy? When they become smarter than humans across domains?

The Findings

Across 13 experiments spanning a variety of task domains, people who disclose using AI are trusted less than those who don't. This "transparency dilemma" is linked to a perceived lack of legitimacy: audiences view AI-assisted work as less socially appropriate. The trust penalty is attenuated among individuals who have a positive attitude toward technology or who believe AI is accurate, but it is not eliminated.

Study 1 (N=195 students): Professors who disclosed using AI for grading were rated less trustworthy (M=2.48 on a 1–7 scale) than those who disclosed using a human assistant (M=2.87) or made no disclosure (M=2.96). This represents a significant trust penalty with a large effect size (d=0.77–0.88).

Study 2: Job applicants who disclosed AI use were trusted less by hiring managers compared to applicants who did not.

Studies 6, 7, and 8: The research traced the trust penalty to a reduction in legitimacy, as audiences perceived the disclosed AI use as less socially appropriate.

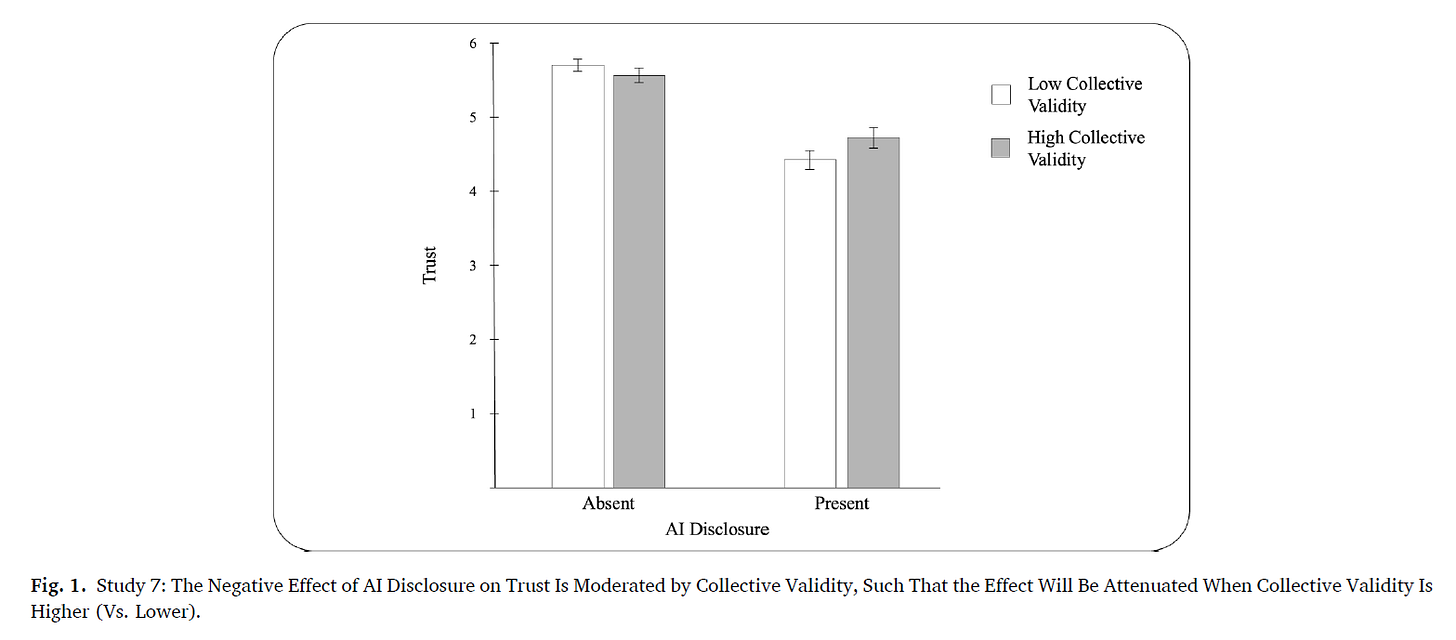

Study 7 (N=426): Startup founders who disclosed AI use received lower trust scores (M=4.55) than those who made no disclosure (M=5.63). This was a drop of over a full point on a 7-point scale with a very large effect size (d=0.93). The study's figure illustrates this gap and shows that the penalty was attenuated, but not eliminated, by priming participants with "collective validity" (making AI seem more common). The trust penalty shrank from a very large effect size (d=1.12) to a still-large but smaller one (d=0.72) when validity was high.
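To make the Study 7 numbers more concrete, here is a minimal sketch that backs out the spread of trust ratings implied by the reported means and effect size. It assumes d is a standard Cohen's d (mean difference divided by a pooled standard deviation); the paper may use a different variant, so treat the result as a rough reading aid rather than a reported statistic. The function name `implied_pooled_sd` is my own.

```python
def implied_pooled_sd(mean_a: float, mean_b: float, d: float) -> float:
    """Back out the pooled SD implied by two means and a Cohen's d.

    Assumes d = (mean_a - mean_b) / pooled_sd, which is an assumption
    about how the effect size was computed, not a fact from the paper.
    """
    if d == 0:
        raise ValueError("d must be non-zero")
    return abs(mean_a - mean_b) / abs(d)


# Values for Study 7 as reported in this brief.
no_disclosure_mean = 5.63
disclosure_mean = 4.55
reported_d = 0.93

gap = no_disclosure_mean - disclosure_mean
sd = implied_pooled_sd(no_disclosure_mean, disclosure_mean, reported_d)

print(f"raw gap: {gap:.2f} points")    # 1.08
print(f"implied pooled SD: {sd:.2f}")  # 1.16
```

Under that assumption, the gap of roughly one scale point is a bit less than one standard deviation of the ratings, which is why it counts as a large effect.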

Study 8: This study used a behavioral measure of trust. Students invested less real money when they were told an investment fund used illegitimate methods ($3.39 on average) compared to when the methods were deemed legitimate ($4.09 on average). This demonstrates that the trust penalty can impact actual behavior, not just survey responses.

Study 9 (N=518): Six different disclosure framings (e.g., “AI helped proofread,” “transparency note,” “human revised afterward”) all resulted in lower trust than no disclosure. The AI disclosure condition explained 16% of the variation in trust scores (η² = 0.16), a substantial share by the standards of behavioral research.
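For readers more used to Cohen's benchmarks, η² can be converted to Cohen's f with the standard formula f = sqrt(η² / (1 − η²)). The sketch below applies it to the Study 9 figure; the function name is my own, and the "large" label relies on Cohen's conventional cutoffs (f of 0.10, 0.25, and 0.40 for small, medium, and large), which are rules of thumb rather than anything specific to this paper.

```python
import math


def eta_squared_to_cohens_f(eta_sq: float) -> float:
    """Convert eta-squared (share of variance explained) to Cohen's f."""
    if not 0 <= eta_sq < 1:
        raise ValueError("eta_sq must be in [0, 1)")
    return math.sqrt(eta_sq / (1 - eta_sq))


f = eta_squared_to_cohens_f(0.16)  # Study 9 value from this brief
print(round(f, 2))  # 0.44
```

An f of about 0.44 sits just above the conventional threshold for a large effect.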

Study 5 (N=597): Even for a mundane task like sending a scheduling email to a coworker, disclosing AI usage led to a significant trust penalty.

AI Exposure vs. Disclosure: Across all 13 studies, disclosure consistently lowered trust, whether voluntary or mandatory. Study 13 showed that being exposed as having used AI without disclosure resulted in an even stronger penalty than self-disclosure, but disclosure still negatively impacted trust when compared to silence.

Figure 1 illustrates the trust gap in Study 7, where startup founders were either described as disclosing AI use or not. On a 1–7 scale, nondisclosers averaged M=5.63 while disclosers averaged M=4.55. The graph shows two bars with a clear separation of over one full trust point. The figure also visualizes how “collective validity” (making AI seem more common and accepted) moderated the effect: the trust penalty shrank from a very large gap (d=1.12) to a still-large but smaller gap (d=0.72). In plain terms: even when participants were primed to think “everyone uses AI,” disclosure still carried a serious trust cost.

Solutions?

The mechanism is legitimacy. People view disclosed AI use as socially inappropriate, even when framed as honest transparency. Training audiences to see AI use as legitimate (e.g., stressing compliance, ethical norms, or accuracy) can soften the penalty but not eliminate it. A meta-analysis inside the paper shows the penalty is smaller among people with positive tech attitudes or strong beliefs in AI accuracy.

So What?

This research challenges the common assumption that “transparency builds trust.” In these experiments, disclosing AI use harmed trust in professional and educational settings. For organizations, mandatory AI-disclosure rules could backfire unless they are paired with communication strategies that build legitimacy.

Cognitive Bleed?

Managers and employees can use these findings to stress-test disclosure strategies, experimenting with different framings before rollout. Teachers and students can treat the dilemma as a live case study, weighing ethics against reputational risk. In both domains, disclosure should be seen not as a simple checkbox but as a communication act requiring careful framing.
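One way to stress-test a framing before rollout is to pilot it against a no-disclosure version and compute the same kind of effect size the paper reports. The sketch below is only an illustration: the ratings are made-up placeholders, not data from the paper, and `cohens_d` is my own helper implementing the textbook pooled-SD formula.

```python
import math
from statistics import mean, variance


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Placeholder pilot ratings on a 1-7 trust scale (hypothetical, for illustration only).
no_disclosure = [6, 5, 6, 5, 7, 5]
framing_a = [5, 4, 5, 4, 5, 4]

penalty = cohens_d(no_disclosure, framing_a)
print(f"trust penalty for framing A: d = {penalty:.2f}")
```

A real pilot would need far more respondents than this, and comparing several framings at once calls for a correction for multiple comparisons. The point is only that each candidate framing can be judged by the size of the penalty it produces, not just by whether one exists.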

Reference

Schilke, O., & Reimann, M. (2025). The transparency dilemma: How AI disclosure erodes trust. Organizational Behavior and Human Decision Processes, 188, 104405. https://doi.org/10.1016/j.obhdp.2025.104405