AI RESEARCH BRIEF: The wicked problem of AI and assessment

Why AI and assessment resist one right answer … but now what?

A new study from Deakin University (Corbin, Bearman, Boud, & Dawson, 2025) argues that AI in higher-education assessment is a “wicked problem,” not a solvable glitch. The team interviewed 20 assessment leads across all faculties and mapped their experiences to Rittel and Webber’s (1973) ten wicked-problem traits.

The Findings

AI pressures assessment on every front, and the study finds all ten wicked-problem characteristics present. Among them: no definitive problem statement, no stopping rule, only better or worse trade-offs, no clean tests, and high stakes for each trial. Teachers reported contradictory framings, from workforce preparation to integrity risk, and described workload, policy, and reputational consequences for any chosen design. Sample: 20 one-hour Zoom interviews at a single large Australian university, across faculties.

The study maps each of the ten traits to teacher quotes, showing trade-offs across integrity, learning, workload, and scale. The ten traits are:

Wicked problems do not have a definitive formulation.

They do not have a stopping rule that signals when they are solved.

Their solutions are not true or false, only good or bad.

There is no immediate or ultimate test of a solution to a wicked problem.

They cannot be studied through trial and error; every trial counts.

There is no end to the number of solutions or approaches to a wicked problem.

All wicked problems are essentially unique.

Wicked problems can always be described as a symptom of other problems.

The way a wicked problem is described determines its possible solutions.

Those who present solutions to these problems have no right to be wrong.

Translation: there is no single fix. Every choice trades off security, authenticity, creativity, and cost against one another.

Solutions?

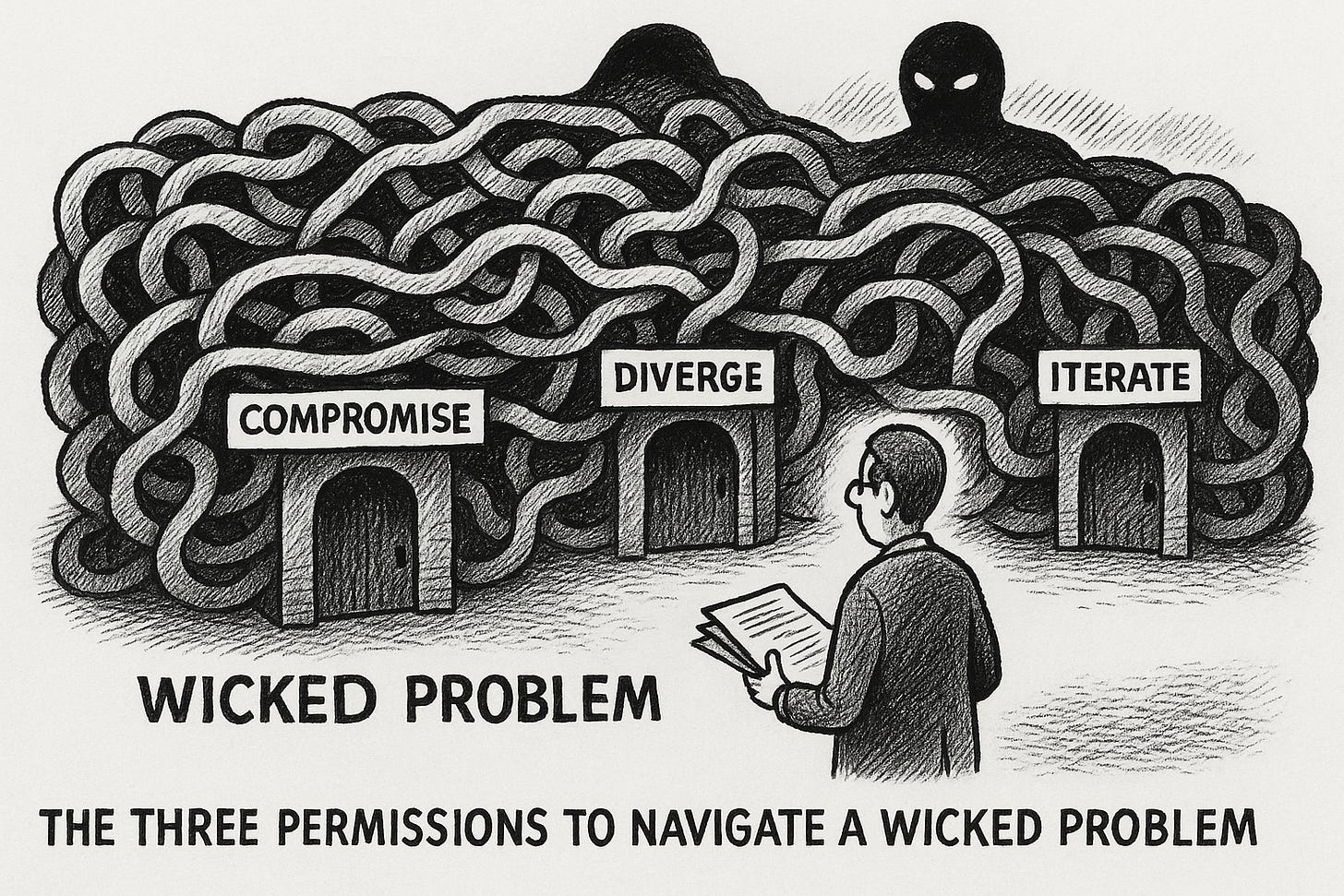

The authors propose three meta-design “permissions” that shift culture from silver-bullet solutions to adaptive practice:

1. Permission to compromise: state explicit trade-offs and explain why they are acceptable.

2. Permission to diverge: fit designs to discipline, cohort size, and professional demands.

3. Permission to iterate: revise assessments as AI and the ways people use it change.

They emphasize that validity should outrank policing and that detection alone is insufficient. Validity in assessment means the degree to which an assessment actually measures what it claims to measure. In the AI context:

Policing = catching misconduct, e.g., detecting AI-written text.

Validity = ensuring the task demonstrates student learning, skills, and reasoning, regardless of whether AI was used.

Two examples make the contrast concrete:

A multiple-choice quiz may be easy to police but has low validity for measuring critical thinking.

An oral defense, design project, or portfolio has higher validity because it shows authentic student capability, even if AI assisted some steps.

So, prioritizing validity means designing tasks that align with intended learning outcomes, rather than relying only on detection systems.

Conclusion?

Treat AI-era assessment as ongoing navigation. Build institutional capacity for local variation, rapid revision, and transparent trade-offs. Reward professional judgment, not uniformity. This approach can reduce policy churn and align assessment more closely with the capabilities graduates actually need.

Research Caveat?

The caveats and limitations of The Wicked Problem of AI and Assessment fall into three points:

1. Framing limitation: The study deliberately used the wicked-problem framework (Rittel & Webber, 1973) to analyze interviews. This lens highlights complexity, trade-offs, and irresolvable tensions, but it also filters what is noticed. Other frameworks, like organizational change or ethics, might surface different insights.

2. Scope limitation: All 20 participants came from a single large Australian university. They were assessment leaders or academics with assessment expertise, interviewed via Zoom in 2024. That gives depth but not breadth. The findings may not generalize to other institutions, countries, or student perspectives.

3. Data limitation: Qualitative interview data captures perceptions, framings, and narratives. It does not measure outcomes such as actual integrity breaches, workload impact, or learning performance. So, the results are interpretive rather than statistical.

The paper itself advises readers to see the wicked-problem framing as both useful and partial, a structured way of thinking rather than settled consensus.

A further caveat: even if the “three permissions” are accepted, a deeper wicked problem remains. How do we train educators to apply them in practice, help them design assessments that balance integrity and authenticity, and equip them to judge the degree of validity rather than defaulting to policing? This is the wicked problem inside the wicked problem:

Layer 1: AI destabilizes assessment itself.

Layer 2: Even if we accept the “three permissions” (compromise, diverge, iterate), there is still the unsolved challenge of training educators to use them skillfully.

Stated more precisely, the wicked problem of assessment training is this: how can educators be prepared to

Exercise the three permissions confidently in real settings (compromise, diverge, iterate),

Design assessments that balance workload, integrity, and authenticity, and

Judge and maintain validity rather than defaulting to policing?

In short, solving the first-order wicked problem of AI and assessment requires solving the second-order wicked problem of educator capability.

Cognitive bleed?

Corporate: use assessment meta-design in learning and development (L&D). Pair AI-assisted tasks with oral or live defenses, require process evidence, and make trade-offs explicit in rubrics. Iterate each quarter based on observed failure modes.

Education: publish course-level AI use norms, diversify task types by discipline, and timetable “revise and defend” cycles that surface reasoning, not just output. Start small, document costs, and recalibrate next term.

References

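Corbin, T., Bearman, M., Boud, D., & Dawson, P. (2025). The wicked problem of AI and assessment. Assessment & Evaluation in Higher Education. Advance online publication. https://doi.org/10.1080/02602938.2025.2553340

Rittel, H. W. J., & Webber, M. M. (1973). Dilemmas in a general theory of planning. Policy Sciences, 4(2), 155–169. https://doi.org/10.1007/BF01405730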
